Relevant Overviews

Overview: Large language models

While this overview will eventually reflect everything tagged #llm on this Hub, my current focus is on how LLMs could best support MyHub users in particular and inhabitants of decentralised collective intelligence ecosystems in general (cf. How Artificial Intelligence will finance Collective Intelligence). This is version 1.x, 2023-04-24.

How the hell does this work?

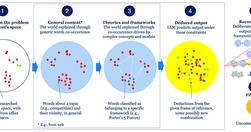

By far the best explanation of how it works comes from Jon Stokes' ChatGPT Explained: A Normie's Guide To How It Works. In essence, "each possible blob of text the model could generate ... is a single point in a probability distribution". So when you submit a question, you're collapsing the wave function and landing on a point in that probability distribution: a collection of symbols probably related to what you typed in. That collection's content depends "on the shape of the probability distributions ... and on the dice that the ... computer’s random number generator is rolling."
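To make the "dice" metaphor concrete, here is a minimal Python sketch, assuming a toy, made-up distribution rather than a real model. Real LLMs generate text one token at a time, sampling each next token from a distribution like this one. The tokens, scores (logits) and the `temperature` knob below are illustrative assumptions, not ChatGPT's actual values.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() to avoid overflow
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(tokens, logits, rng, temperature=1.0):
    """Roll the dice: pick one token with probability proportional to its softmax weight."""
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical toy distribution over continuations of "Schrödinger's cat is ..."
tokens = ["alive", "dead", "both", "a thought experiment"]
logits = [2.0, 1.8, 1.2, 0.5]

rng = random.Random(42)  # seeded so the "dice" give the same rolls each run
samples = [sample_next(tokens, logits, rng) for _ in range(1000)]
for token in tokens:
    print(f"{token!r}: {samples.count(token)} of 1000")
```

Change the seed and the counts shift, but "alive" and "dead" both keep turning up: the same question lands in different regions of the distribution. Lowering `temperature` concentrates almost all the probability on the top-scoring token.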

So if you ask whether Schrödinger's cat is alive or dead, you'll get different answers depending on how you ask the question, not because the LLM understands anything about Schrödinger, his cat or quantum mechanics, but because amongst all "possible collections of symbols the model could produce... there are regions in the model’s probability distributions that contain collections of symbols we humans interpret to mean that the cat is alive. And ... adjacent regions ... containing collections of symbols we interpret to mean the cat is dead. ChatGPT’s latent space has been deliberately sculpted into a particular shape by...":

- Training "a foundation model on high-quality data" so it's "like an atom where the orbitals are shaped in a way we find to be useful."

- Fine-tuning "it with more focused, carefully curated training data" to reshape problematic results

- Reinforcement learning with human feedback (RLHF) to further refine the "model’s probability space so that it covers as tightly as possible only the points ... that correspond to “true facts” (whatever those are!)"

The key takeaway here is that ChatGPT is not truly talking to you, it's "just shouting language-like symbol collections into the void". And here's a good example of its spectacularly wrong hallucinations.

What the hell does it mean?

As such it is not "almost AI"; it's not even close. According to Noam Chomsky, "The human mind is not... a lumbering statistical engine for pattern matching... True intelligence is demonstrated in the ability to think and express improbable but insightful things.... [and is] also capable of moral thinking", whereas ChatGPT's "moral indifference born of unintelligence... exhibits something like the banality of evil: plagiarism and apathy and obviation", summarising arguments but refusing to take a position because its creators learnt the lesson of Microsoft's Tay chatbot.

Not understanding this is dangerous, as the interview/profile of Emily M. Bender in You Are Not a Parrot And a chatbot is not a human makes clear: "LLMs are great at mimicry and bad at facts... the Platonic ideal of the bullshitter... don’t care whether something is true or false... only about rhetorical power. [So] do not conflate word form and meaning. Mind your own credulity... [we've made] machines that can mindlessly generate text... we haven’t learned how to stop imagining the mind behind it."

Believing an LLM understands what it says is particularly problematic given that it was trained on words written overwhelmingly by white people, with men and the wealthy overrepresented (see also OpenAI’s ChatGPT Bot Recreates Racial Profiling). Bender is very good on what the safe use of artificial intelligence looks like (TL;DR: ChatGPT ain't it), covering the dehumanising effect of treating LLMs like humans, and humans like LLMs, and asking: what happens when we habituate "people to treat things that seem like people as if they’re not"? Won't we all start treating real humans worse?

Answer: we might "lose a firm boundary around the idea that humans... are equally worthy", bordering on fascism: "The AI dream is governed by the perfectibility thesis... a fascist form of the human."

For more on why we must avoid a tiny number of companies dominating the field:

- "We are now facing the prospect of a significant advance in AI using methods that are not described in the scientific literature and with datasets restricted to a company that appears to be open only in name… And if history is anything to go by, we know a lack of transparency is a trigger for bad behaviour in tech spaces” - Everyone’s having a field day with ChatGPT — but nobody knows how it actually works

- The claims about ChatGPT by "fascinated evangelists" are simply "a distraction from the actual harm perpetuated by these systems. People get hurt from the very practical ways such models fall short in deployment", while its achievements are presented as independent of its engineers' choices, disconnecting them from human accountability - ChatGPT, Galactica, and the Progress Trap.

How can I use it?

Understanding how it does what it does is key to understanding the answer to this question, so Stokes' Normie's Guide, above, is also good here: "Isn’t the model’s ability to make things up often a feature, not a bug?".

More practically:

- in The Mechanical Professor, Ethan Mollick, a university professor, puts ChatGPT through its paces. From my notes: impressive as a teacher, not great as a researcher/academic writer ("Nothing particularly wrong, but also nothing good"), a reasonable summariser and general writer ("results were not brilliant, and I wouldn’t vouch for their accuracy"). So while not yet a threat to real academics, ChatGPT is a job-killer for copywriters, almost everyone on New Grub Street and anyone else spending their days churning out rehashed content.

- learn something: give it a summary of something (or ask it to summarise something for you), then ask it to quiz you on it and rate your answers. Either way, double-check for hallucinations...

Prompt engineering

My reading queue is overflowing with identikit posts on prompt engineering, which I'll get to eventually.

AutoGPT: adding value to LLMs to create focused AI assistants

"AutoGPTs... automate multi-step projects that would otherwise require back-and-forth interactions with GPT-4... enable the chaining of thoughts to accomplish a specified objective and do so autonomously" - something I've already played with in the shape of AgentGPT, and which encapsulates how I'll integrate this into MyHub.ai (see next section), because this approach "transforms chat from a basic communication tool into ... AI into assistants working for you".
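The chaining idea can be sketched in a few lines of Python. This is a hypothetical illustration, not AutoGPT's or AgentGPT's actual code: `run_agent` asks a model for a plan, then works through the steps, feeding each earlier result back into the next prompt. `fake_llm` is a hypothetical stand-in so the sketch runs offline; in practice a real model API call would take its place.

```python
from typing import Callable, List

STEP_PREFIX = "Now do: "


def run_agent(objective: str, call_llm: Callable[[str], str], max_steps: int = 5) -> List[str]:
    """AutoGPT-style loop (hypothetical): plan once, then run each step with earlier results as context."""
    plan = call_llm(f"List the steps needed to achieve: {objective}. One per line.")
    steps = [s.strip() for s in plan.splitlines() if s.strip()][:max_steps]
    results: List[str] = []
    for step in steps:
        done_so_far = "\n".join(results)  # chaining: previous outputs feed the next prompt
        prompt = f"Objective: {objective}\nDone so far:\n{done_so_far}\n{STEP_PREFIX}{step}"
        results.append(call_llm(prompt))
    return results


def fake_llm(prompt: str) -> str:
    """Offline stand-in for a real model call, returning canned text."""
    if prompt.startswith("List the steps"):
        return "Find notes tagged #llm\nSummarise them\nSuggest three questions"
    current_step = prompt.splitlines()[-1][len(STEP_PREFIX):]
    return f"[result of: {current_step}]"


for line in run_agent("prepare a briefing on LLMs from my Hub", fake_llm):
    print(line)
```

Capping `max_steps` matters: because each step's prompt includes everything done so far, prompts (and costs) grow with every step.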

How should it be integrated into MyHub?

How could these LLMs be integrated into tools for thought in general, and (tomorrow's) MyHub.ai in particular? I've always wanted to access AI services from inside the MyHub thinking tool (see Thinking and writing in a decentralised collective intelligence ecosystem), from where it can apply its abilities to one's own notes. But what will that look like?

My first thought: "imagine your own personal AI assistant operating across your content - your public posts, private library (including content shared from friends, stuff in your reading queue, etc.) and the wider web, emphasising the sources you favour (based firstly on your Priority Sources, and then on the number of times you've curated their content)."

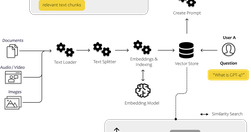

In I Built an AI Chatbot Based On My Favorite Podcast, the author shows how to do just that: "It took probably a weekend of effort" to build a chatbot to search his library "of all transcripts from the Huberman Lab podcasts, finds relevant sections and send them to GPT-3 with a carefully designed prompt".

A step-by-step guide to building a chatbot based on your own documents with GPT goes into far more detail.
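The pattern both pieces describe (find the relevant sections of your own library, then send them to the model with a carefully designed prompt) can be sketched in Python as follows. This is a minimal, hypothetical illustration: it swaps the dense embeddings such chatbots typically use (searched with something like FAISS) for crude word-count vectors and cosine similarity, so it runs without libraries or API keys. The notes and the prompt wording are made up.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def vectorise(text: str) -> Counter:
    """Crude bag-of-words vector; a real system would use learned embeddings instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(question: str, notes: Dict[str, str], k: int = 2) -> List[str]:
    """Return the titles of the k notes most similar to the question."""
    q = vectorise(question)
    ranked = sorted(notes, key=lambda title: cosine(q, vectorise(notes[title])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, notes: Dict[str, str], k: int = 2) -> str:
    """Put the retrieved notes into a prompt that tells the model to stick to them."""
    sources = "\n\n".join(f"[{t}]\n{notes[t]}" for t in top_k(question, notes, k))
    return (
        "Answer using only the sources below, citing them by title. "
        "If they don't contain the answer, say so.\n\n"
        f"{sources}\n\nQuestion: {question}"
    )


# Hypothetical notes standing in for a personal library
notes = {
    "Blurry JPEG": "Ted Chiang argues ChatGPT is a blurry jpeg of the web.",
    "Parrot": "Emily Bender warns that LLMs mimic language without meaning.",
    "Sourdough": "Feed the starter twice a day and keep it warm.",
}
print(build_prompt("What does Chiang say about ChatGPT and the web?", notes))
```

The printed prompt is what would be sent to the model. The sketch also shows the weakness of plain word counts: with `k=2`, the sourdough note comes second purely because it shares "the" and "and" with the question, which is one reason real systems use embeddings.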

Pretty soon I'll be able to do something similar, playing with ChatGPT combined with my entire Hub of over 3600 pieces of content. But while the above chatbot answers questions, I'm already pretty sure I don't want to treat ChatGPT like a search engine.

Instead, going into this experiment, ChatGPT as muse, not oracle is my overall starting point, asking "What if we were to think of LLMs not as tools for answering questions, but as tools for asking us questions and inspiring our creativity? ... ChatGPT asked me probing questions, suggested specific challenges, drew connections to related work, and inspired me to think about new corners of the problem."

Other ideas include:

- as a tutor, as in The Mechanical Professor, where Ethan Mollick "put the text of my book into ChatGPT and asked for a summary ... asked it for improvements... to write a new chapter that answered this criticism... results were not brilliant, and I wouldn’t vouch for their accuracy, but it could serve a basis for writing."

- finding me content on the web based on my interests, as represented by my notes

- auto-categorisation: I've been manually tagging stuff I like since 2002. I have not been consistent in the use of tags. Can ChatGPT help me organise my stuff?
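As a first pass at the tag problem, here is a minimal Python sketch, assuming tags are plain strings. Cheap rules (a deliberately crude normaliser, not an LLM) can merge obvious spelling variants such as "LLMs", "#llm" and "llm", leaving only the genuine judgement calls, such as whether "AI" and "artificial_intelligence" mean the same thing, for ChatGPT. The function names, prompt wording and example tags are hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict, List


def tag_key(tag: str) -> str:
    """Normalise a tag: lowercase, keep only letters/digits, strip one trailing plural 's' (crudely)."""
    key = re.sub(r"[^a-z0-9]", "", tag.lower())
    if len(key) > 3 and key.endswith("s") and not key.endswith("ss"):
        key = key[:-1]
    return key


def group_variants(tags: List[str]) -> Dict[str, List[str]]:
    """Bucket tags whose normalised keys collide, e.g. 'LLMs', '#llm' and 'llm'."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for tag in tags:
        groups[tag_key(tag)].append(tag)
    return dict(groups)


def merge_prompt(groups: Dict[str, List[str]]) -> str:
    """Hand the remaining judgement calls (synonyms, not spelling variants) to an LLM."""
    canonical = sorted(min(variants, key=len) for variants in groups.values())
    return (
        "These are tags from my personal library. Suggest which ones mean the same thing "
        "and should be merged, and propose one name for each merged group:\n" + ", ".join(canonical)
    )


tags = ["LLMs", "#llm", "llm", "collective-intelligence", "Collective Intelligence",
        "AI", "artificial_intelligence"]
groups = group_variants(tags)
print(groups)
print(merge_prompt(groups))
```

The plural rule is crude ("analysis" would become "analysi"), so the groups are suggestions to review, not merges to apply blindly.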

Or should it be integrated at all?

But I'm mindful that I might not find it that useful. Many years ago, for example, I thought I wanted autosummary: click a button and get a summary of an article as I put it into my Hub. But that risks robbing me of any chance of learning anything from it.

Moreover, as Ted Chiang points out in ChatGPT Is a Blurry JPEG of the Web, AI should not be used as a writing tool if you're trying to write something original: "Sometimes it’s only in the process of writing that you discover your original ideas... Your first draft isn’t an unoriginal idea expressed clearly; it’s an original idea expressed poorly", accompanied by your dissatisfaction with it, which drives you to improve it. "just how much use is a blurry jpeg when you still have the original?"

Which links to a piece not tagged "llm", where Jeffrey Webber points to a central problem with Tools for Thought: "the word ‘Tool’ first causes us to focus on the tool more than the thinking... to confuse thought as an object rather than thought as a process... obsessed with managing notes, the external indicator of thought, rather than the internal process of thinking... we do less and less of the thinking and more and more of the managing."

Can AI help? Maybe, but would we be as willing to have another human do our thinking?

Relevant resources

A guide to creating the "chatbot that responds to queries based on the content of uploaded PDF or Text files... built using Langchain, FAISS (Facebook AI Similarity Search... developed by Meta for efficient similarity search and clustering of dense vectors), and OpenAI’s GPT-4... found at ai-docreader.streamlit.app...". The piece first lists use ca…

"does appealing to the (non-existent) “emotions“ of LLMs make them perform better? The answer is YES"

Rob Phillips, Founder & CEO FastlaneAI, ex-VP for Siri etc., on the "Fundamentals of Assisted Intelligence" - or where OpenAI is going with AI agents.

I asked Questy.ai, a startup, the following question: What is the best communication technique for reaching someone who believes many conspiracy theories, and sees all arguments as further evidence of conspiracy? A summary of its response, which contained cited references: "When engaging with someone who holds fast to conspiracy theories and views …

Ethan Mollick on "the culmination of the first phase of the AI era ... [which] ends with the ... Google’s Gemini, the first LLM model likely to beat OpenAI’s GPT-4... enough pieces ... are in place ... to see what AI can actually do, at least in the short term... [although] implications of what this phase of AI will mean for work and education is…

"My advice: Jump headfirst into AI with everything you’ve got."

Is your organization more like a jellyfish or a flatworm? The author's "Jellyfish and Flatworm story has been remarkably effective at helping ... [executives] visualize the impact of AI on their customers, their products, and their employees... this story is about why Knowledge Representation (KR) must be the core of any cost-effective long-term AI…

Something I definitely need to factor in: "Powerful AI models, such as OpenAI's GPT-4, are being bombarded by digital bots that are "extracting intelligence" in new and nefarious ways.... you can train another model based on 100,000 high quality outputs from GPT-4 ... [so] bad actors are creating bots that bombard models with questions and leave …

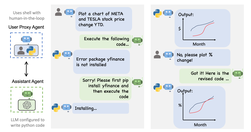

"AutoGen is a framework that enables development of LLM applications using multiple agents that can converse with each other to solve tasks. AutoGen agents are customizable, conversable, and seamlessly allow human participation. They can operate in various modes that employ combinations of LLMs, human inputs, and tools... provides a drop-in repla…

"despite the immense potential of LLMs, they come with a range of challenges... hallucinations, the high costs associated with training and scaling, the complexity of addressing and updating them, their inherent inconsistency, the difficulty of conducting audits and providing explanations, predominance of English language content... [they're also]…

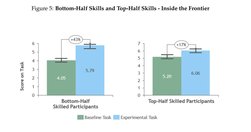

insights into the complex relationship between AI and knowledge work, emphasizing the need for a nuanced understanding of AI's role in enhancing productivity and quality in different task domains - Harpa

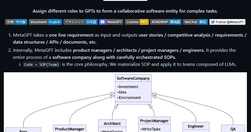

"available on Github ... can take a single line of what you want to do and turn it into ... user stories, an analysis of the competition, requirements, data structures, APIs, and documents" using a multi-AIgent framework to replicate an entire "team of product managers, architects, project managers, and engineers... $0.2 for a basic example, inclu…

"Bring AI to your browser. Chat with websites, PDFs, videos, write emails, SEO articles, tweets, automate workflows, monitor prices & data. Bing AI & Notion AI alternative... can summarize and reply to emails for you, rewrite, rephrase, correct and expand text, read articles, translate and scan web pages for data. "I started testing this browser …

"With Extensions, Bard can find and show you relevant information from the Google tools you use every day... across multiple apps and services." The personal assistant potential looks huge for users of Google services - eg "Give me a summary of my emails today". Moreover, 3rd-party extensions are on the way, starting with Adobe Firefly, Adobe’s fa…

Asks: could LLMs be used to "create tools that sift and summarize scientific evidence for policymaking... [for] knowledge brokers providing presidents, prime ministers, civil servants and politicians with up-to-date information on how science and technology intersects with societal issues... [who must] nimbly navigate ... millions of scientific pa…

How to use LLMs to solve "really hard problems"? The "AIdeas Collider" approach, piloted by the Head of Innovation Design at MIT's Collective Intelligence Design Lab.

"now you can control how ChatGPT responds in every chat by using custom instructions" - this quick runthrough of what they are, how to enable them, and some examples only scratches the surface. When using custom instructions you answer two questions: tell ChatGPT more about you; tell ChatGPT how you want it to respond. The two answers should probably ma…

"how can you use ChatGPT to generate ideas and brainstorm within Obsidian?", which is currently my note-taking tool of choice. First, some generic advice for prompting: "be specific about the outcome that you want to achieve... providing a prompt that contains more descriptive language ...give preceding prompts ... [specify the] responses you want.…

As Wired puts it: "All sorts of apps have been strapping on AI functionality... any of it useful?" For example, email apps "like Spark and Canary are prominently bragging about their built-in AI functionality... write replies for you... generate an entire email ... summarize a long email ... or even a thread." While it sounds good, the author thinks…

To avoid "robotic, cliche AI text that sounds fake" and get "AI-generated content to emulate your unique writing style", you have to feed the AI a sample of your writing. A good prompt: "Based on the tone and writing style in the seed text, create a style guide for a blog or publication that captures the essence of the seed’s tone. Emphasize engagi…

One of my favourite writers in this area on AGI risks. "It is the rise of artificial general intelligence, or A.G.I., that worries the experts... Yet a nascent A.G.I. lobby of academics, investors and entrepreneurs counter that, once made safe, A.G.I. would be a boon to civilization... beholden to an ideology that views this new technology as inev…

Borrowing heavily from an article "about social media platform saturation inspired by the craze to sign up on Threads (only to sign out a week later).", Alberto Romero does the same for generative AI to explain "why it isn’t worth getting generative AI fatigue." After providing a potted timeline of the release of the major LLMs for the past 6 months…

Online chatbots are supposed to have "guardrails ... to prevent their systems from generating hate speech, disinformation and other toxic material. Now there is a way to easily poke holes in those safety systems... and use any of the leading chatbots to generate nearly unlimited amounts of harmful information... [using] a method gleaned from open …

A (rather MS-worshipful) introduction to "Code Interpreter... game-changing plugin inside GPT-4 that simplifies data handling and analysis by using plain English ... anyone — regardless of their technical skills — can access and interpret complex information in seconds." "Several" use cases are presented, but all are in fact data analysis and visua…

Summarises a recent Meta paper on Llama 2, "a continuation of the LLaMA... Big picture, this is a big step for the LLM ecosystem when research sharing is at an all-time low and regulatory capture at an all-time high" - so Meta continues its improbable position as good guy in the open-source movement (at least when it comes to AI, but also possibly in socia…

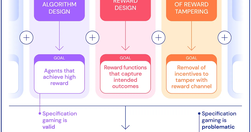

"Specification gaming: a behaviour that satisfies the literal specification of an objective without achieving the intended outcome"

"ChatGPT only looks at the past... [its response] based on an “average” of everything already said on the issue. It’s pure reversion to the mean"

Explores the risks posed by AI in seven areas: Who will take responsibility if an AI military system goes “rogue” with devastating results? ... AI could eventually replace a quarter of the full-time jobs in the United States and Europe. Computer programmers, marketing researchers, media workers and teachers ... Privacy: The surveillance potential of …

Fantastic essay by Sara Walker, an astrobiologist and theoretical physicist, reframing AI into deep historical and evolutionary contexts. Starts by pointing out that "we are embedded in a living world, yet we do not even recognize all the life on our own Earth. For most of human history, we were unaware of the legions of bacteria living and dying .…

Ethan Mollick with "A pragmatic approach to thinking about AI", taking issue with the idea that "since AI is made of software, it should be treated like other software. But AI is terrible software... We want our software to yield the same outcomes every time", which LLMs clearly don't. He makes a couple of other arguments (we don't know how they wor…