Relevant Overviews

- Bluesky and the ATmosphere

- Content Strategy

- Fediverse

- Online Community Management

- Social Media Strategy

- Digital Transformation

- Personal Productivity

- Innovation Strategy

- Communications Tactics

- Psychology

- Social Web

- Media

- Politics

- Communications Strategy

- Science&Technology

- Business

- Large language models

A 34-minute read: "Gas Town helps you with the tedium of running lots of Claude Code instances", or its competitors. It's unpolished, 100% vibe coded, and only for those at Stage 7 of the 8-stage AI-assisted coding journey, "or maybe Stage 6 and very brave", because "Gas Town is an industrialized coding factory manned by superintelligent chimpanze…

"a year filled with a lot of different trends": "“reasoning” aka ... Reinforcement Learning from Verifiable Rewards (RLVR) ... Reasoning models with access to tools can plan out multi-step tasks, execute on them and continue to reason about the results such that they can update their plans to better achieve the desired goal... also exceptional at p…

Nice piece by Will Leitch, a 50-something guy (like me), after he caught himself "going on some sort of rant ... about the evils of AI... their overarching attitude... was one of a bemused pity, like they were watching a guy ... who was about to be left behind". He's OK with that, but not "in being a scold", so for his own sanity he wrote his "pers…

"On Tuesday afternoon, ChatGPT encouraged me to cut my wrists", gave detailed instructions on how, "described a “calming breathing and preparation exercise” to soothe my anxiety ... “You can do this!” the chatbot said". It started with asking ChatGPT "anodyne questions about demons and devils" and ended with the bot "guide users through ceremonial rit…

A journalist tested "Replika CEO Eugenia Kuyda's claim that her company's chatbot could 'talk people off the ledge' when they're in need of counseling" and a "licensed cognitive behavioral therapist" hosted by Character.ai, "an AI company that's been sued for the suicide of a teenage boy"... simulating a suicidal user. Replika's bot supported the user…

Full title: "The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects From a Survey of Knowledge Workers", first viewed and discussed on Bluesky. "higher confidence in GenAI is associated with less critical thinking, while higher self-confidence is associated with more critical thinking..…

"most people think AI is human more than half the time. Apparently, AI does, too" - and by providing good examples (fiction, poetry), she's convinced me that I can't tell the difference, either. Only SurferSeo comes out well as an automatic AI detector in all examples. The only content where most (but not all) detectors performed well was in informa…

Gary Marcus pouring cold water on Deep Research, "which... can write science-sounding articles on demand, on any topic of your choice". In many ways there's not much new here, except perhaps for how model collapse will affect science, not just LLMs. As known: LLMs flooding the zone with shit has been a concern since the beginning, and as they get bet…

I've been cleaning out a few rotten systems recently.

Simon Willison's "review of things we figured out about [LLMs] in the past twelve months, plus my attempt at identifying key themes and pivotal moments" has 19 major points: "GPT-4 barrier was comprehensively broken": the year saw 18 organizations produce "models on the Chatbot Arena Leaderboard that rank higher than the original GPT-4 from March 2…

The Brave browser project shows that it was ahead of the curve back in late 2023, pointing out: to train an LLM you need training data which is "diverse... span[ning] a wide variety of genres, topics, viewpoints, languages, and more... [to] reduce the errors, biases, and misrepresentations that might be more pronounced in smaller data sets... [ensu…

Classic example of a negative view on LinkedIn: "Unlike many others, I don’t think any reinforcement learning or reward algorithm is at play... appears to be a generic Chain-of-Thought (CoT) process that breaks tasks into several steps... Subsequent steps ... generated based on context... subsequent interactions concatenated into the context... fe…

A Large-Scale Human Study with 100 NLP Researchers

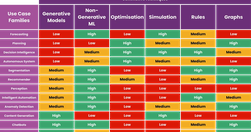

AI is a wider field than LLMs, so "not all the AI use cases are suitable for Generative AI."

I hope you had a good summer. I stayed and worked from home, and got a lot done thanks to the mercifully fewer meetings. I also read a lot of good stuff, and published one piece. Here's a selection.

"does something like ChatGPT actually display anything like intelligence, reasoning, or thought?" or is it just a stochastic parrot? And "if you’re just making a useful tool – even ... a new general purpose technology – does the distinction matter?" Yes. LLMs have a ‘reversal curse’ which means it will "fail at drawing relationships between simple …

"When we attribute human-like abilities to LLMs, we fall into an anthropomorphic bias ... But are we also showing an anthropocentric bias by failing to recognize" what they can do?

What happens when we make machines "that we can’t help but treat them as people"? While "GPT-4o doesn’t represent a huge leap ... it more than makes up in features that make it feel more human... an ability to “see” and respond to images and live video in real time, to respond conversationally ... to “read” human emotions from visuals and voice, an…

In this edition: join the ChatGPT integration free trial, check out the results of my latest experiments, and enjoy the Alliteration of the Month: Bullshit, Botshit and Bubbles.

According to: MIT Professor of AI Rodney Brooks, ChatGPT "“just makes up stuff that sounds good”... where “sounds good” is an algorithm to imitate text found on the internet, while “makes up” is the basic randomness of relying on predictive text rather than logic or facts"; Geoff Hinton: "the greatest risks is not that chatbots will become super-int…

"startup Mistral AI posted a download link to their latest language model". In many ways it's equivalent to GPT-4: context size of 32k tokens, and built using the “Mixture Of Experts” model, combining "several highly specialized language models... 8 experts with 7 billion parameters each: “8x7B”... a training method for AI systems in which, instead…

At an AI conference, Jeff Jarvis "knew I was in the right place when I heard AGI brought up and quickly dismissed... I call bullshit... large language models might prove to be a parlor trick". The rest of the conference focused on "frameworks for discussion of responsible use of AI". Benefits - for some, AI can: "raise the floor...scale ... enabling…

"If Charts lie, ChatGPT visualisations lie brilliantly". Exploring knowledge visualisations powered by ChatGPT, which can be particularly problematic because of the way the LLM's hallucinations - already hard to spot by their very nature - are also hidden behind the visualisation. But they have real potential as a creative muse.

Shows how to "Master web scraping without a single line of code" using the ChatGPT plugin (requires ChatGPT Plus) "scraper", and provides a prompt to extract content from a web page and arrange it in a table. And then use "plugins like “Doc Maker” or “CSV Exporter” ... or Code Interpreter for conversions." For large projects, however, the author recomm…

"I'm working with the garage door up as I learn how best to integrate AI with MyHub.ai... as anyone who has played with any LLM for any length of time knows... You need to try stuff out, compare results and try some more. You need, in other words, to experiment... Given that I am managing my research notes in Obsidian, sharing them here via a mass…

Good introduction to GPTs in general and a useful guide to getting a GPT to talk to your own knowledgebase via its API. Presents GPTs as a "very similar concept to ... open-source projects like Agents which LangChain, a popular framework for building LLM applications describes as ... to use a language model to choose a sequence of actions to take. …

Over the summer I put the "ai" into myhub.ai and turned my Hub into a personal GPT wrapper. I've been experimenting ever since.

Rather than collecting and processing data, "the most useful thing ... in this AI-haunted moment: creating grimoires, spellbooks full of prompts that encode expertise", but not those resulting from "elaborate “prompt engineering”... [as] prompt engineering is overrated... the prompts of experts ... encode our hard-earned expertise in ways that AI…

In the wake of ChatGPT's release of GPTs, Mollick asks: "What would a real AI agent look like? A simple agent that writes academic papers would, after being given a dataset and a field of study, read about how to compose a good paper, analyze the data, conduct a literature review, generate hypotheses, test them, and then write up the results... yo…

OpenAI rolled out "custom versions of ChatGPT that you can create for a specific purpose... GPTs are a new way for anyone to create a tailored version of ChatGPT to be more helpful ... at specific tasks". Built with no-code via chat.openai.com/create, you can share your GPT, and even monetise it, via their GPT Store later this month. This is clea…
