Relevant Overviews

- Bluesky and the ATmosphere

- Content Strategy

- Fediverse

- Online Community Management

- Social Media Strategy

- Digital Transformation

- Personal Productivity

- Innovation Strategy

- Communications Tactics

- Psychology

- Social Web

- Media

- Politics

- Communications Strategy

- Science&Technology

- Business

- Large language models

Overview: Large language models

While this overview will eventually reflect everything tagged #llm on this Hub, my current focus is on how LLMs could best support MyHub users in particular and inhabitants of decentralised collective intelligence ecosystems in general (cf. How Artificial Intelligence will finance Collective Intelligence). This is version 1.x, 2023-04-24.

How the hell does this work?

The best explanation of how it works by far comes from Jon Stokes' ChatGPT Explained: A Normie's Guide To How It Works. In essence, "each possible blob of text the model could generate ... is a single point in a probability distribution". So when you Submit a question, you're collapsing the wave function and landing on a point in that probability distribution: a collection of symbols probably related to what you inputted. That collection's content depends "on the shape of the probability distributions ... and on the dice that the ... computer’s random number generator is rolling."
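To make the "dice" part of that description concrete, here is a toy sketch in Python. It is not how ChatGPT is implemented: the three candidate replies and their probabilities are invented for illustration, and the names `NEXT_REPLY_PROBS`, `sample_reply` and `sample_many` are hypothetical. It only shows that the same question can yield different text depending on the random number generator.

```python
import random

# Toy stand-in for a model's output distribution. The replies and the
# probabilities are made up for illustration; a real LLM assigns
# probabilities to tokens, not to whole ready-made sentences.
NEXT_REPLY_PROBS = {
    "the cat is alive": 0.45,
    "the cat is dead": 0.40,
    "the cat is both alive and dead": 0.15,
}

def sample_reply(probs, rng):
    """Pick one reply at random, weighted by its probability."""
    replies = list(probs)
    weights = [probs[reply] for reply in replies]
    return rng.choices(replies, weights=weights, k=1)[0]

def sample_many(probs, seed, n):
    """Draw n replies using a random number generator seeded with `seed`."""
    rng = random.Random(seed)
    return [sample_reply(probs, rng) for _ in range(n)]

if __name__ == "__main__":
    # Same "question", different dice: each seed may land on a different point.
    for seed in (1, 2, 3):
        print(seed, sample_many(NEXT_REPLY_PROBS, seed, 3))
```

Reshaping the distribution (changing the weights) changes which replies are likely, and reseeding the generator changes which one actually comes out. Neither step involves knowing anything about cats.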

So if you ask whether Schrodinger's Cat is alive or dead, you'll get different answers depending on how you ask the question not because the LLM understands anything about Schrodinger, his cat or quantum mechanics, but because amongst all "possible collections of symbols the model could produce... there are regions in the model’s probability distributions that contain collections of symbols we humans interpret to mean that the cat is alive. And ... adjacent regions ... containing collections of symbols we interpret to mean the cat is dead. ChatGPT’s latent space has been deliberately sculpted into a particular shape by...":

- Training "a foundation model on high-quality data" so it's "like an atom where the orbitals are shaped in a way we find to be useful."

- Fine-tuning "it with more focused, carefully curated training data" to reshape problematic results

- Reinforcement learning with human feedback (RLHF) to further refine the "model’s probability space so that it covers as tightly as possible only the points ... that correspond to “true facts” (whatever those are!)"

The key takeaway here is that ChatGPT is not truly talking to you, it's "just shouting language-like symbol collections into the void". And here's a good example of its spectacularly wrong hallucinations.

What the hell does it mean?

As such it is not "almost AI", it's not even close. According to Noam Chomsky, "The human mind is not... a lumbering statistical engine for pattern matching... True intelligence is demonstrated in the ability to think and express improbable but insightful things.... [and is] also capable of moral thinking", whereas ChatGPT's "moral indifference born of unintelligence... exhibits something like the banality of evil: plagiarism and apathy and obviation", summarising arguments but refusing to take a position because its creators learnt their lesson with Taybot.

Not understanding this is dangerous, as the interview/profile of Emily M. Bender in You Are Not a Parrot And a chatbot is not a human makes clear: "LLMs are great at mimicry and bad at facts... the Platonic ideal of the bullshitter... don’t care whether something is true or false... only about rhetorical power. [So] do not conflate word form and meaning. Mind your own credulity... [we've made] machines that can mindlessly generate text... we haven’t learned how to stop imagining the mind behind it."

Believing an LLM understands what it says is particularly a problem given that it was trained on words written overwhelmingly by white people, with men and wealth overrepresented (see also OpenAI’s ChatGPT Bot Recreates Racial Profiling). Bender is very good on what the safe use of artificial intelligence looks like (TL;DR: ChatGPT ain't it), covering the dehumanising effect of treating LLMs like humans, and humans like LLMs, and asking what happens when we habituate "people to treat things that seem like people as if they’re not"? Won't we all start treating real humans worse?

Answer: we might "lose a firm boundary around the idea that humans... are equally worthy", bordering on fascism: "The AI dream is governed by the perfectibility thesis... a fascist form of the human."

For more on why we must avoid a tiny number of companies dominating the field:

- "We are now facing the prospect of a significant advance in AI using methods that are not described in the scientific literature and with datasets restricted to a company that appears to be open only in name… And if history is anything to go by, we know a lack of transparency is a trigger for bad behaviour in tech spaces" - Everyone’s having a field day with ChatGPT — but nobody knows how it actually works

- The claims re: ChatGPT by "fascinated evangelists" are simply "a distraction from the actual harm perpetuated by these systems. People get hurt from the very practical ways such models fall short in deployment", while its achievements are presented as independent of its engineers' choices, disconnecting them from human accountability - ChatGPT, Galactica, and the Progress Trap.

How can I use it?

Understanding how it does what it does is key to understanding the answer to this question, so Stokes' Normie's Guide, above, is also good here: "Isn’t the model’s ability to make things up often a feature, not a bug?".

More practically:

- in The Mechanical Professor Ethan Mollick, uni professor, puts ChatGPT through its paces. From my notes: impressive as a teacher, not great as a researcher/academic writer ("Nothing particularly wrong, but also nothing good"), reasonable summariser and general writer ("results were not brilliant, and I wouldn’t vouch for their accuracy"). So while not yet a threat to real academics, ChatGPT is a jobkiller for copywriters, almost everyone on New Grub Street and anyone else spending their days churning out rehashed content.

- learn something: give it a summary of something (or ask it to summarise, but then doublecheck for hallucinations), and then ask it to ask you questions about it and rate your answers. But doublecheck for hallucinations...

Prompt engineering

My reading queue is overflowing with identikit posts on prompt engineering, which I'll get to eventually.

AutoGPT: adding value to LLMs to create focused AI assistants

"AutoGPTs... automate multi-step projects that would otherwise require back-and-forth interactions with GPT-4... enable the chaining of thoughts to accomplish a specified objective and do so autonomously" - something I've already played with in the shape of AgentGPT, and which encapsulates how I'll integrate this into MyHub.ai (next), because this approach "transforms chat from a basic communication tool into ... AI into assistants working for you".

How should it be integrated into MyHub?

How could these LLMs be integrated into tools for thought in general, and (tomorrow's) MyHub.ai in particular? I've always wanted to access AI services from inside the MyHub thinking tool (see Thinking and writing in a decentralised collective intelligence ecosystem), from where it can apply its abilities to one's own notes. But what will that look like?

My first thought: "imagine your own personal AI assistant operating across your content - your public posts, private library (including content shared from friends, stuff in your reading queue, etc.) and the wider web, emphasising the sources you favour (based firstly on your Priority Sources, and then on the number of times you've curated their content)."
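The source-weighting rule in that quote (Priority Sources first, then the number of times their content has been curated) can be sketched as a simple sort. This is only an illustration under assumed names: the `Source` class, its fields and `rank_sources` are hypothetical and say nothing about how MyHub.ai actually stores or ranks sources.

```python
from dataclasses import dataclass

@dataclass
class Source:
    # Hypothetical record; field names are assumptions for this sketch.
    name: str
    is_priority: bool    # flagged by the user as a Priority Source
    times_curated: int   # how often the user has curated its content

def rank_sources(sources):
    """Order sources: priority ones first, then by curation count (highest first).

    Ties are broken alphabetically so the ordering is deterministic.
    """
    return sorted(
        sources,
        key=lambda s: (not s.is_priority, -s.times_curated, s.name),
    )

if __name__ == "__main__":
    example = [
        Source("blog-a", False, 40),
        Source("newsletter-b", True, 3),
        Source("journal-c", True, 12),
        Source("site-d", False, 2),
    ]
    for source in rank_sources(example):
        print(source.name)
```

An assistant built this way would still need some rule for blending the ranking with relevance to the question, which the quote leaves open.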

In I Built an AI Chatbot Based On My Favorite Podcast, the author shows how to do just that: "It took probably a weekend of effort" to build a chatbot to search his library "of all transcripts from the Huberman Lab podcasts, finds relevant sections and send them to GPT-3 with a carefully designed prompt".
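The pattern described there (search a library, pick the relevant sections, send them to the model inside a prompt) can be sketched in a few lines. The sketch below makes several assumptions: simple word overlap stands in for whatever search the author actually used, the prompt wording is invented, the function names are hypothetical, and no model is called; it stops at building the prompt text.

```python
import re

def tokenize(text):
    """Lower-case the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def top_sections(question, sections, k=2):
    """Return up to k sections sharing the most words with the question.

    Word overlap is a crude stand-in for a real search step; sections with
    no overlap at all are dropped.
    """
    question_words = tokenize(question)
    scored = []
    for index, section in enumerate(sections):
        overlap = len(question_words & tokenize(section))
        if overlap > 0:
            scored.append((overlap, index, section))
    # Highest overlap first; earlier sections win ties.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [section for _, _, section in scored[:k]]

def build_prompt(question, sections, k=2):
    """Assemble a prompt that asks the model to answer from the excerpts only."""
    context = "\n---\n".join(top_sections(question, sections, k))
    return (
        "Answer the question using only the excerpts below. "
        "If they do not contain the answer, say so.\n\n"
        f"Excerpts:\n{context}\n\nQuestion: {question}\nAnswer:"
    )

if __name__ == "__main__":
    library = [
        "Sleep and light exposure in the morning",
        "Cold exposure and dopamine",
        "Caffeine timing after waking",
    ]
    print(build_prompt("How does morning light affect sleep?", library))
```

Restricting the model to the retrieved excerpts narrows what it can draw on, but it does not remove the need to check the answer against the source.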

A step-by-step guide to building a chatbot based on your own documents with GPT goes into far more detail.

Pretty soon I'll be able to do something similar, playing with ChatGPT combined with my entire Hub of over 3600 pieces of content. But while the above chatbot answers questions, I'm already pretty sure I don't want to treat ChatGPT like a search engine.

Instead, going into this experiment, ChatGPT as muse, not oracle is my overall starting point, asking "What if we were to think of LLMs not as tools for answering questions, but as tools for asking us questions and inspiring our creativity? ... ChatGPT asked me probing questions, suggested specific challenges, drew connections to related work, and inspired me to think about new corners of the problem."

Other ideas include:

- as a tutor, as in The Mechanical Professor, who "put the text of my book into ChatGPT and asked for a summary ... asked it for improvements... to write a new chapter that answered this criticism... results were not brilliant, and I wouldn’t vouch for their accuracy, but it could serve a basis for writing."

- finding me content on the web based on my interests, as represented by my notes

- auto-categorisation: I've been manually tagging stuff I like since 2002. I have not been consistent in the use of tags. Can ChatGPT help me organise my stuff?
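Before handing tags to an LLM, it may help to see how inconsistent they are. The sketch below is a non-LLM baseline, offered as an assumption about what a first step could look like rather than anything MyHub.ai does: it groups tags that differ only in case, spacing or punctuation. The function names and the sample tags are hypothetical.

```python
import re
from collections import defaultdict

def normalise(tag):
    """Reduce a tag to lower-case letters and digits only."""
    return re.sub(r"[^a-z0-9]", "", tag.lower())

def group_variants(tags):
    """Map each normalised form to its spelling variants.

    Only forms with more than one distinct spelling are returned, since
    those are the candidates for merging.
    """
    groups = defaultdict(set)
    for tag in tags:
        groups[normalise(tag)].add(tag)
    return {
        key: sorted(variants)
        for key, variants in groups.items()
        if len(variants) > 1
    }

if __name__ == "__main__":
    tags = ["llm", "LLM", "social-media", "social media", "psychology"]
    print(group_variants(tags))
```

This catches only surface variation. Plurals, synonyms and overlapping concepts are the harder cases, and those are where a language model might plausibly add something.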

Or should it be integrated at all?

But I'm mindful that I might not find it that useful. Many years ago, for example, I thought I wanted autosummary: click a button and get an autosummary of an article as I put it into my Hub. But that risks robbing me of any chance of learning anything from it.

Moreover, as Ted Chiang points out in ChatGPT Is a Blurry JPEG of the Web, AI should not be used as a writing tool if you're trying to write something original: "Sometimes it’s only in the process of writing that you discover your original ideas... Your first draft isn’t an unoriginal idea expressed clearly; it’s an original idea expressed poorly", accompanied by your dissatisfaction with it, which drives you to improve it. As he asks, "just how much use is a blurry jpeg when you still have the original?"

Which links to a piece not tagged "llm", where Jeffrey Webber points to a central problem with Tools for Thought: "the word ‘Tool’ first causes us to focus on the tool more than the thinking... to confuse thought as an object rather than thought as a process... obsessed with managing notes, the external indicator of thought, rather than the internal process of thinking... we do less and less of the thinking and more and more of the managing."

Can AI help? Maybe, but would we be as willing to have another human do our thinking?

Relevant resources

[EN translation] of Damien van Achter's "semi-automated monitoring and publishing system" This is essentially an automated, LLM-driven version of my content pipeline, where the AI actually writes and (following a human check) publishes the text to multiple platforms. The full process is 9 steps long, from (what I call) priority source identificatio…

A 34 minute read: "Gas Town helps you with the tedium of running lots of Claude Code instances", or its competitors. It's unpolished, 100% vibe coded, and only for those at Stage 7 of the 8 stage AI-assisted coding journey, "or maybe Stage 6 and very brave", because "Gas Town is an industrialized coding factory manned by superintelligent chimpanze…

"a year filled with a lot of different trends": "“reasoning” aka ... Reinforcement Learning from Verifiable Rewards (RLVR) ... Reasoning models with access to tools can plan out multi-step tasks, execute on them and continue to reason about the results such that they can update their plans to better achieve the desired goal... also exceptional at p…

Nice piece by Will Leitch, a 50-something guy (like me), after he caught himself "going on some sort of rant ... about the evils of AI... their overarching attitude... was one of a bemused pity, like they were watching a guy ... who was about to be left behind". He's OK with that, but not "in being a scold", so for his own sanity he wrote his "pers…

"On Tuesday afternoon, ChatGPT encouraged me to cut my wrists", gave detailed instructions how, "described a “calming breathing and preparation exercise” to soothe my anxiety ... “You can do this!” the chatbot said". It started with asking ChatGPT "anodyne questions about demons and devils" and ended with the bot "guide users through ceremonial rit…

A journalist tested: "Replika CEO Eugenia Kuyda's claim that her company's chatbot could "talk people off the ledge" when they're in need of counseling"; a "licensed cognitive behavioral therapist" hosted by Character.ai, an AI company that's been sued for the suicide of a teenage boy"... simulating a suicidal user. Replika's bot supported the user…

Full title: "The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects From a Survey of Knowledge Workers", first viewed and discussed on Bluesky. "higher confidence in GenAI is associated with less critical thinking, while higher self-confidence is associated with more critical thinking..…

"most people think AI is human more than half the time. Apparently, AI does, too" - and by providing good examples (fiction, poetry), she's convinced me that I can't tell the difference, either. Only SurferSeo comes out well as an automatic AI detector in all examples. The only content that most (but not all) detectors performed well was in informa…

Gary Marcus pouring cold water on Deep Research, "which... can write science-sounding articles on demand, on any topic of your choice". In many ways there's not much new here, except perhaps for how model collapse will affect science, not just LLMs. As known: LLMs flooding the zone with shit has been a concern since the beginning, and as they get bet…

I've been cleaning out a few rotten systems recently.

Simon Willison's "review of things we figured out about [LLMs] in the past twelve months, plus my attempt at identifying key themes and pivotal moments" has 19 major points: "GPT-4 barrier was comprehensively broken": the year saw 18 organizations produce "models on the Chatbot Arena Leaderboard that rank higher than the original GPT-4 from March 2…

The Brave browser project shows that it was ahead of the curve back in late 2023, pointing out: to train an LLM you need training data which is "diverse... span[ning] a wide variety of genres, topics, viewpoints, languages, and more... [to] reduce the errors, biases, and misrepresentations that might be more pronounced in smaller data sets... [ensu…

Classic example of a negative view on LinkedIn: "Unlike many others, I don’t think any reinforcement learning or reward algorithm is at play... appears to be a generic Chain-of-Thought (CoT) process that breaks tasks into several steps... Subsequent steps ... generated based on context... subsequent interactions concatenated into the context... fe…

A Large-Scale Human Study with 100 NLP Researchers

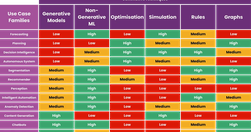

AI is a wider field than LLMs, so "not all the AI use cases are suitable for Generative AI."

I hope you had a good summer. I stayed and worked from home, and got a lot done thanks to the mercifully fewer meetings. I also read a lot of good stuff, and published one piece. Here's a selection.

"does something like ChatGPT actually display anything like intelligence, reasoning, or thought?" or is it just a stochastic parrot? And "if you’re just making a useful tool – even ... a new general purpose technology – does the distinction matter?" Yes. LLMs have a ‘reversal curse’ which means they will "fail at drawing relationships between simple …

"When we attribute human-like abilities to LLMs, we fall into an anthropomorphic bias ... But are we also showing an anthropocentric bias by failing to recognize" what they can do?

What happens when we make machines "that we can’t help but treat them as people"? While "GPT-4o doesn’t represent a huge leap ... it more than makes up in features that make it feel more human... an ability to “see” and respond to images and live video in real time, to respond conversationally ... to “read” human emotions from visuals and voice, an…

In this edition: join the ChatGPT integration free trial, check out the results of my latest experiments, and enjoy the Alliteration of the Month: Bullshit, Botshit and Bubbles.

According to: MIT Professor of AI Rodney Brooks, ChatGPT "“just makes up stuff that sounds good"... where “sounds good” is an algorithm to imitate text found on the internet, while “makes up” is the basic randomness of relying on predictive text rather than logic or facts"; Geoff Hinton: "the greatest risks is not that chatbots will become super-int…

"startup Mistral AI posted a download link to their latest language model". In many ways it's equivalent to GPT4: context size of 32k tokens, and built using the “Mixture Of Experts” model, combining "several highly specialized language models... 8 experts with 7 billion parameters each: “8x7B”... a training method for AI systems in which, instead…

At an AI conference, Jeff Jarvis "knew I was in the right place when I heard AGI brought up and quickly dismissed... I call bullshit... large language models might prove to be a parlor trick". The rest of the conference focused on "frameworks for discussion of responsible use of AI". Benefits - for some, AI can: "raise the floor...scale ... enabling…

"If Charts lie, ChatGPT visualisations lie brilliantly". Exploring knowledge visualisations powered by ChatGPT, which can be particularly problematic because of the way the LLM's hallucinations - already hard to spot by their very nature - are also hidden behind the visualisation. But they have real potential as a creative muse.

Shows how to "Master web scraping without a single line of code" using the ChatGPT plugin (requires ChatGPT Plus) "scraper", and provides a prompt to extract content from a web page and arrange it in a table. And then use "plugins like “Doc Maker” or “CSV Exporter” ... or Code Interpreter for conversions." For large projects, however, author recomm…

"I'm working with the garage door up as I learn how best to integrate AI with MyHub.ai... as anyone who has played with any LLM for any length of time knows... You need to try stuff out, compare results and try some more. You need, in other words, to experiment... Given that I am managing my research notes in Obsidian, sharing them here via a mass…

Good introduction to GPTs in general and a useful guide to getting a GPT to talk to your own knowledgebase via its API. Presents GPTs as a "very similar concept to ... open-source projects like Agents which LangChain, a popular framework for building LLM applications describes as ... to use a language model to choose a sequence of actions to take. …

Over the summer I put the "ai" into myhub.ai and turned my Hub into a personal GPT wrapper. I've been experimenting ever since.

Rather than collecting and processing data, "the most useful thing ... in this AI-haunted moment: creating grimoires, spellbooks full of prompts that encode expertise", but not those resulting from "elaborate “prompt engineering”... [as] prompt engineering is overrated... the prompts of experts ... encode our hard-earned expertise in ways that AI…

In the wake of ChatGPT's release of GPTs, Mollick asks: "What would a real AI agent look like? A simple agent that writes academic papers would, after being given a dataset and a field of study, read about how to compose a good paper, analyze the data, conduct a literature review, generate hypotheses, test them, and then write up the results... yo…
