ChatGPT Explained: A Normie's Guide To How It Works

my notes ( ? )

Jon Stokes thinks "people are talking about this chatbot in unhelpful ways... anthropomorphizing ... [and] not working with a practical, productive understanding of what the bot’s main parts are and how they fit together."

So he wrote this explainer.

"At the heart of ChatGPT is a large language model (LLM) that belongs to the family of generative machine learning models... a function that can take a structured collection of symbols as input and produce a related structured collection of symbols as output... [like] Letters in a word, Words in a sentence, Pixels in an image, Frames in a video... The hard and expensive part ... is hidden deep inside the word related... the more abstract and subtle the relationship, the more technology we’ll need".

He provides 4 examples of relationships between 2 concepts:

- "relating the collections {cat} and {at-cay}... a standard Pig Latin transformation ... managed with a simple, handwritten rule set...

- {cat} to {dog}...

- {the cat is alive} to {the cat is dead}... [invoking] an intertextual reference to Schrödinger’s Cat...

- {the cat is immature} vs. {the cat is mature}... Since it’s a cat, “immature” is probably ... “juvenile”, [but] If ... a human, the sentence would more likely be invoking a cluster of concepts around age-appropriate behavior...

as the number of possible relationships increases, the qualities of the relationships themselves increase in abstraction, complexity, and subtlety... [In this] bewildering, densely connected network of concepts", some relationships are more likely than others, introducing an element of probability.

For example, "we’re talking about a cat, it’s more likely that the mature/immature dichotomy is related to a cluster of concepts around physical development and less likely that it’s related to a cluster of concepts around emotional or intellectual development", but it still might me about the latter.

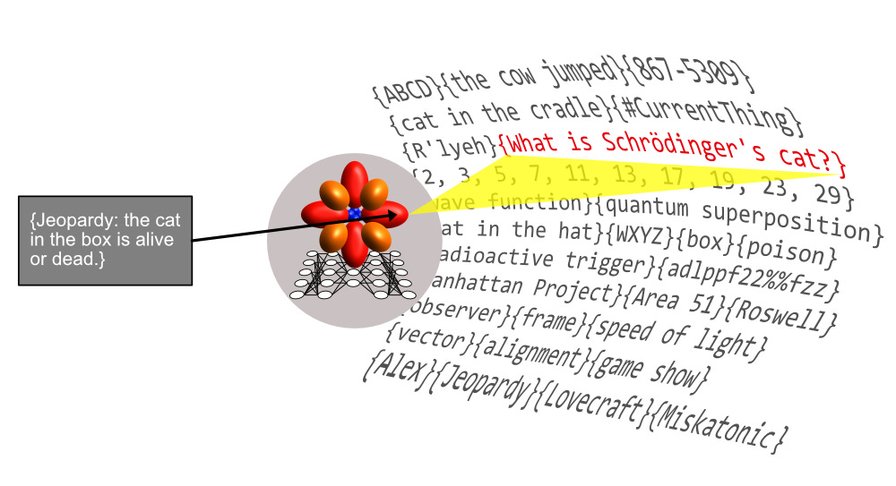

In summary, "When the relationships among collections of symbols are complex and stochastic, then throwing more storage and computing power at the problem of relating one collection to another enables you to relate those collections in ever richer and more complex ways." He then introduces the concept of probability distribution using the atomic orbitals of hydrogen atoms(!).

Why? For LLMs, "each possible blob of text the model could generate ... is a single point in a probability distribution". So when you write a text and hit "Submit", you're collapsing the wave function, resulting "in an observation of a single collection of symbols", so sometimes you arrive at "a point in the probability distribution ... like, {The cat is alive}, and at other times you’ll end up at a point that corresponds to {The cat is dead}... depending on the shape of the probability distributions ... and on the dice that the ... computer’s random number generator is rolling."

Instead of thinking that the model knows the cat's status, understand that "In the space of all the possible collections of symbols the model could produce... there are regions in the model’s probability distributions that contain collections of symbols we humans interpret to mean that the cat is alive. And ... adjacent regions ... containing collections of symbols we interpret to mean the cat is dead... ChatGPT’s latent space — i.e., the space of possible outputs ... has been deliberately sculpted into a particular shape by an expensive training process".

Hence hallucinations. Bug or feature?

Different input collections (prompts) will result in different output collections (responses), which is why we can ask the same question in different ways and get different answers: we’re landing on different points in a probability distribution.

So can we "eliminate or at least shrink the probability distribution" of untrue statements? And should we?

We have three tools:

- Training "a foundation model on high-quality data" so it's "like an atom where the orbitals are shaped in a way we find to be useful.

- Fine-tuning "it with more focused, carefully curated training data" to reshape problematic results

- Reinforcement learning with human feedback (RLHF) to further refine the "model’s probability space so that it covers as tightly as possible only the points ... that correspond to “true facts” (whatever those are!)"

Which raises some interesting questions.

- Who gets to refine the model and thus define truth? Another echo of the fundamental issues regarding tackling disinfo. "Are they really interested in the truth, or are they just trying to sculpt this model’s probability distributions to serve their own ends?"

- "do I really always want the truth? ... Isn’t the model’s ability to make things up often a feature, not a bug?"

Only human beings can interpret the meaning of a text. LLMs are not an author and have no intent, so there is no authorial intent, and so perhaps represent "humanity’s first practical use for reader response theory... ChatGPT doesn’t think about you" its chat-based UX is just "a kind of skeuomorphic UI affordance", and is not "truly talking to you... just shouting language-like symbol collections into the void".

To understand this better, understand token windows: "pre-ChatGPT language models ... take in tokens (symbols) and spit out tokens. The window is the number of tokens a model can ingest... the probability space does not change shape in response to the tokens... weights remain static ... It doesn’t remember", which is why "GPT-3 purposefully inject randomness".

ChatGPT, however, does remember, "appending the output of each new exchange to the existing output so that the content of the token window grows" While it can access the entire chat history, that's all it knows about you. The token window is therefore a "shared, mutable state" that the model and I develop together, giving the model more input to find the most relevant word sequence for me."

BingChat seems to include a websearch too.

The upcoming "32K-token window [is]... enough tokens to really load up the model with fresh facts, like customer service histories, book chapters or scripts, action sequences, and many other things."

Read the Full Post

The above notes were curated from the full post www.jonstokes.com/p/chatgpt-explained-a-guide-for-normies?utm_source=pocket_saves.Related reading

More Stuff I Like

More Stuff tagged llm , chatgpt , explainer , bingchat , ai

See also: Content Strategy , Digital Transformation , Innovation Strategy , Communications Tactics , Media , Science&Technology , Large language models