Trust takes time

My notes

"Only people can trust, but only machines scale well. Today’s websites and apps are built to compensate for an absence of trust, rather than to support its growth."

Instead we have the 'I accept' button: "we know we are lying the moment we touch it, as does the author of the legalese no one expects anyone to read. Navigating a trustless world is a heavy price to pay... [but] it doesn’t have to be like this... machines can help us ... evolve our relationships by handling the fiddly bits on our behalf... data technology is fundamentally trust technology... a transversal layer within any ecosystem".

A really beautifully written essay on trust, using the author's experience as a father to make it personal. Trust is "an inherently human relationship that anchors our presence into a changing world".

The problem: "There are no winners when there is no trust and when it is cumbersome or difficult to establish sustainable trust relationships". For example: consent dialogs.

While we probably want to buy a service, those selling the service actually want our data, a relationship which "more closely resembles parasitism rather than commensalism... a short-term game that hurts both parties in the long term". As a result:

- "companies are nowhere near the most valuable data points..."

- "people are getting almost no value out of their own data... we limit the value of our own data to what we can do with it ourselves"

One reason is the blind belief that the only issue at stake here is privacy, but "Nobody protects their home from burglars by cementing all the doors shut... we still need access" to our house, and our online services.

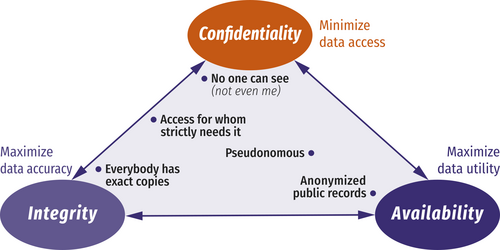

So to get "our data to work for us", let's disentangle the privacy issue "into three nuanced sliders in the so-called CIA triad... Confidentiality... balances against the desired Availability of the data, as well as the Integrity with which that data is stored and exchanged":

You can't have all three. You can have none.

"Our availability problems remain still so much bigger than our confidentiality issues. We’re missing out on enormous value from potential usage of our data."

Ironically, despite the fact that "most people think they want privacy, and a lot of companies are stealing personal data", the truth is that "People need more than privacy [see above, and] ... Companies don’t want to steal data."

The author dissects the many ways in which consent dialogs fail. A selection:

- consent is "not an approach for building trust, but rather an explicit acknowledgment of a pre-existing relationship or even lack thereof... [so] when you first visit a website, consent serves as a ... mutual affirmation of distrust"

- "consent ... usually only given when you already trust the other person... [it] cannot meaningfully predate trust"

- legally, consent requires the "agreement to be informed and freely given", but does anyone understand the consent agreements they accept?

In summary: "consent ... cannot be automated... Only humans can consent".

The thing is, there's so much value in our data for us: "I do want my supermarket to know that my microwave oven is broken, and that I have 7 people over for dinner tonight, and also I came by bike... I have a cat that only likes a specific brand of tuna, which obviously I forgot".

But an upfront consent form cannot cover all this, because when I click it I don't know what the future holds. We need "systems that recognize we don’t yet know where we’re going", and that trust is incremental, so "each exchange of data only requires the trust pertaining to that specific exchange... [including] history of the data, indicating its origin and reliability... policies ... describing the allowed usage. The encapsulating trust envelope protects the person [by] ... facilitat[ing] correct processing of the correct data [and] protects the company [by] ... provid[ing] them with specific, legally valid proof ... [for] automated legal audits."
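To make the envelope idea a bit more concrete, here is a minimal Python sketch, assuming an envelope is simply the data plus its history (origin, reliability) and a usage policy (allowed purposes, expiry date). All names here (`TrustEnvelope`, `request_usage`, the purposes) are hypothetical and mine, not the author's, and this is not how any real system implements it. Each request is checked against the policy for that one exchange, and an audit record is produced whether or not the data is released.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Provenance:
    origin: str         # where the data came from (hypothetical field)
    reliability: float  # 0.0 = unverified, 1.0 = verified by the source


@dataclass(frozen=True)
class UsagePolicy:
    allowed_purposes: frozenset[str]  # purposes the person agreed to
    expires: datetime                 # timezone-aware end of validity


@dataclass(frozen=True)
class TrustEnvelope:
    data: dict[str, str]
    provenance: Provenance
    policy: UsagePolicy


@dataclass(frozen=True)
class AuditRecord:
    recipient: str
    purpose: str
    allowed: bool
    checked_at: datetime


def request_usage(
    envelope: TrustEnvelope,
    recipient: str,
    purpose: str,
    now: datetime | None = None,
) -> tuple[dict[str, str] | None, AuditRecord]:
    """Release the data only if this purpose is allowed and the policy is
    still valid; always return an audit record of the check."""
    if now is None:
        now = datetime.now(timezone.utc)
    allowed = (
        purpose in envelope.policy.allowed_purposes
        and now < envelope.policy.expires
    )
    record = AuditRecord(recipient, purpose, allowed, now)
    return (envelope.data if allowed else None), record
```

Logging refusals as well as releases is a deliberate choice in this sketch: the record is then evidence for both sides, not only for the company.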

This allows the trust relationship to evolve, rather than users giving their trust before the relationship can even start. It also means companies can gain users' trust and then ask for "more and better data... new mutually beneficial data points that most company lawyers had long given up on."

While this means "replacing one giant consent dialog by tons of tiny dialogs", these will be "managed automatically ... according to my preferences", which I set along the three CIA sliders, thus avoiding "consent fatigue ... the polar opposite of what GDPR intended ...: people have (the illusion of) choice, but exercising this right is so cumbersome that many would rather not have it."

This is because GDPR is framed around the idea "that every software system is conspiring against citizens" which, while generally true today, "prematurely kills innovation towards a future where some systems work for people".

GDPR does foresee "multiple possible legal grounds for the exchange of data", of which consent is just one, so "Our digital assistant could thus leverage the other mechanisms when appropriate... and resort to consent for cases we distinctly flagged beforehand".
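A minimal sketch of such an assistant's decision rule, in Python. It assumes the requester states which of the six legal grounds in GDPR Article 6(1) it relies on, and that my preferences boil down to two hypothetical lists: data types I flagged beforehand as "always ask me", and (data type, purpose) pairs I'm happy to share automatically. Whether a claimed ground actually applies is a legal judgment the code does not attempt. Consent requests always go back to the human, since "only humans can consent"; anything my preferences don't cover is refused by default, which is my assumption, not the author's.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class LegalBasis(Enum):
    # The six lawful bases listed in GDPR Article 6(1)
    CONSENT = "consent"
    CONTRACT = "contract"
    LEGAL_OBLIGATION = "legal obligation"
    VITAL_INTERESTS = "vital interests"
    PUBLIC_TASK = "public task"
    LEGITIMATE_INTERESTS = "legitimate interests"


class Decision(Enum):
    SHARE = "share"          # handled automatically
    ASK_HUMAN = "ask human"  # one of the "tiny dialogs"
    REFUSE = "refuse"


@dataclass(frozen=True)
class DataRequest:
    requester: str
    data_type: str
    purpose: str
    claimed_basis: LegalBasis


@dataclass
class Preferences:
    # Data types flagged beforehand: always ask me, whatever the basis
    always_ask: set[str] = field(default_factory=set)
    # (data_type, purpose) pairs I am happy to share without being asked
    auto_share: set[tuple[str, str]] = field(default_factory=set)


def decide(request: DataRequest, prefs: Preferences) -> Decision:
    if request.data_type in prefs.always_ask:
        return Decision.ASK_HUMAN
    if request.claimed_basis is LegalBasis.CONSENT:
        # Consent cannot be automated, so the assistant never gives it
        return Decision.ASK_HUMAN
    if (request.data_type, request.purpose) in prefs.auto_share:
        # Another legal ground is claimed and my preferences cover it
        return Decision.SHARE
    # Not covered by my preferences: refuse by default (an assumption)
    return Decision.REFUSE
```

With `always_ask={"health"}` and `auto_share={("household_size", "delivery")}`, the supermarket learns about the 7 dinner guests without a dialog, while anything health-related still pops up a question.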

In conclusion, GDPR is "making it marginally more expensive to do the wrong thing... [and] substantially more expensive to do the right thing... [We need] a new generation of techno-legal systems... [to] set up and maintain long-term relationships and negotiate mutual benefit."


The above notes were curated from the full post ruben.verborgh.org/blog/2024/10/15/trust-takes-time/?utm_source=pocket_shared.


More Stuff tagged trust, ai4communities, consent, privacy, gdpr, regulation, ruben verborgh

See also: Online Community Management , Social Media Strategy , Social Web , Politics