AI models collapse when trained on recursively generated data

my notes

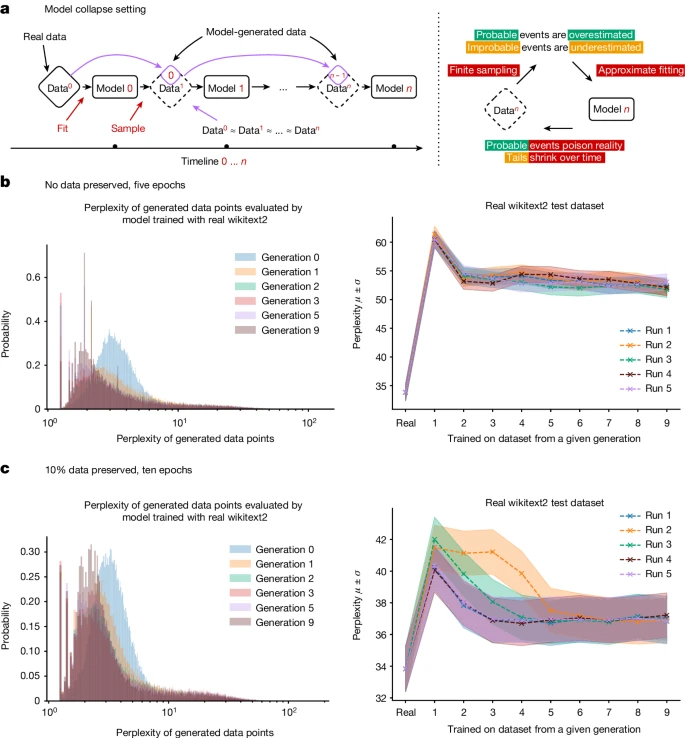

What happens to generative AI models once they themselves "contribute much of the text found online"? The paper's answer: "indiscriminate use of model-generated content in training causes irreversible defects ... tails of the original content distribution disappear. We refer to this effect as ‘model collapse’ ... a degenerative process ... models forget the true underlying data distribution"

Implications?

- "it must be taken seriously if we are to sustain the benefits of training from large-scale data scraped from the web"

- data on "genuine human interactions ... will be increasingly valuable" - hence AI for communities.

The full scientific paper is of course long and dense. Some key points from a high-level scan:

- "two special cases: early model collapse and late model collapse"

- "More approximation power can even be a double-edged sword... may counteract statistical noise... but it can equally compound the noise. More often than not, we get a cascading effect, in which individual inaccuracies combine to cause the overall error to grow"

- "the process of model collapse is universal among generative models that recursively train on data generated by previous generations."
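To make the "tails disappear" and "cascading effect" points concrete, here is a toy simulation. It is only a sketch under my own assumptions, not the paper's code or experimental setup: the "model" is a one-dimensional Gaussian fitted to a small sample, each generation trains only on samples drawn from the previous generation's fit, and the function name `simulate_collapse` and its parameters are made up for illustration.

```python
import random
import statistics


def simulate_collapse(generations=200, sample_size=10, seed=0):
    """Toy recursive training loop (illustrative assumption, not the paper's setup).

    Generation 0 is the "true" distribution N(0, 1). Each later generation
    fits a Gaussian to a finite sample drawn from the previous generation's
    fitted Gaussian. Returns the fitted standard deviation per generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0          # the original "human" data distribution
    history = [sigma]
    for _ in range(generations):
        # "Generate content" with the current model.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # "Train" the next model on that generated content only.
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)  # maximum-likelihood estimate
        history.append(sigma)
    return history


if __name__ == "__main__":
    history = simulate_collapse()
    for generation in (0, 10, 50, 100, 200):
        print(f"generation {generation:3d}: fitted std = {history[generation]:.6f}")
```

Each refit loses a little of the spread through sampling error, and because every generation inherits the previous one's estimate, those small losses compound: the fitted standard deviation drifts towards zero, so the tails of the original distribution are the first thing to go. Larger samples slow the drift but, in this toy setup, do not stop it.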

Interestingly: in a sense, this is not new - for example, "click, content and troll farms [are] a form of human ‘language models’... to misguide social networks and search algorithms." What's new is the scale that LLMs make possible: LLM-generated content poisoning the training data of future LLMs - a self-poisoning Ouroboros.

And because it's difficult to tell LLM-generated content apart from human-written content on the web, today's genAI companies have a ‘first mover advantage’. A newcomer would not have access to "pure" training content, unless OpenAI et al. were forced to share.

Read the Full Post

The above notes were curated from the full post: www.nature.com/articles/s41586-024-07566-y
