I read almost 50 articles on Fake News so you don’t have to (Topics, Dec 15; updated)

A work in progress from an upcoming eponymous post.

Another experiment with the enewsletter format: some initial thoughts on this seemingly intractable problem, with some of the source materials I’m studying.

(update, Dec 17: literally hours after I published this, Facebook made its Big Announcement. I’ve integrated it, partly digested, below).

As ever, browse all issues & subscribe.

...

I probably don’t need to tell you that fake news is the topic of the moment - it’s as if this is a just-discovered Brand New Problem. Just try telling that to a Brit (see image, above).

So, not a new problem, then. And if you think it’s limited to the Anglo-Saxon world, I have some very bad news for you.

Backstory

Online, I first came across fake news in 2007 as I explored the weird and frightening world of Eurosceptic echo chambers. Back then they weren’t algorithmically driven, so I figured that although they were a problem, they wouldn’t be a mainstream problem.

Which is probably why Eli Pariser’s 2011 book on the emergence of filter bubbles - echo chambers reinforced by social media algorithms like Facebook’s Newsfeed - made such an impact on me. Because whenever someone discovers how to make a fortune out of human psychology, problems generally result. They did.

(update: Eli Pariser created a Slack Channel for discussing solutions - see you there, but start here:

A number of the ideas below have significant flaws. It’s not a simple problem to solve – some of the things that would pull down false news would also pull down news in general. But we’re in brainstorm mode.

- Media ReDesign: The New Realities.)

Until the 2016 US election, however, this remained a niche topic: while I’ve been reading and tagging resources as filter bubble and fake for several years, the vast majority of the latter were published only recently.

So, what did I learn?

Sorry, wrong question - try: “what am I reading?” This enewsletter edition is me rereading and reflecting on those resources, as part of my recently tweaked personal content strategy, where I write these newsletters every few weeks to help me absorb and digest the stuff I read every day, before writing a (hopefully) original post of my own.

All I know as I start is there will be no easy solutions - this is me trying to understand the key questions.

Although it’s more of a shared, annotated reading list than anything else, I do hope you get as much out of reading it as I did writing it - every article I quote below is well worth your time, and I do include some first thoughts.

You know it when you see it, right?

Firstly, what is fake news?

not much drives traffic as effectively as stories that vindicate and/or inflame the biases of their readers…

- What was fake on the Internet this week: Why this is the final column (December 2015)

(So fake news is stuff that’s made to be shared on the internet? But isn’t everyone trying to get their stuff shared on the internet?)

analysis of six hyperpartisan Facebook pages found that posts with mostly false content or no facts fared better than their truthful counterparts

- Facebook’s fake news problem won’t fix itself

We presented approximately 800 participants across four studies with statements ranging from the mundane to the meaningful. We included some bullshit too…

- Most of the information we spread online is quantifiably “bullshit”

mainstream media and polling systems underestimated the power of alt-right news sources and smaller conservative sites that largely rely on Facebook … Before social media, the filter was provided by media companies…

- Facebook’s failure: did fake news and polarized politics get Trump elected?

(So it’s stuff that’s doing well on Facebook / the internet that’s not true? But who determines truth here?)

… government propaganda designed to look like independent journalism… any old made-up bullshit … a hoax meant to make a larger point… [or only] when it shows up on a platform like Facebook as legitimate news? What about conspiracy theorists … satire intended to entertain… a real news organization that gets it wrong?

- The Cynical Gambit to Make ‘Fake News’ Meaningless

There’s bad information out there that’s not necessarily fake. It’s never as clear-cut as you think… Facebook’s algorithm may not understand the various shades of falsehood.

- Facebook’s Fake News Crackdown: It’s Complicated

OK, so one person’s fake news is another person’s Truth and an entire Macedonian village’s main revenue stream?

And what about Bye-Bye Belgium (hat-tip: Giorgio Clarotti)? Is this 2006 ‘docu-fiction’ journalism? Fake news? Fiction? Satire? Or all of the above?

(Update: a good historical perspective plus some reframing from CJR:

an independent, powerful, widely respected news media establishment is an historical anomaly… Fake news is but one symptom of that shift back to historical norms… provides a … scapegoat for journalists grappling with their diminished institutional power

- The real history of fake news )

And whoa! Is fake news even human?

a large portion of online chatter about the 2016 elections was generated by bots…

- Misinformation on social media: Can technology save us?

(No. Technology = Algorithms, which can - and will - always be gamed.)

Politics

The exploitation of these techniques by political parties is where it starts getting genuinely frightening. In the radical right corner:

“If you want to make big improvements in communications… hire physicists”. Cambridge Analytica… claims to have built psychological profiles … on 220 million American voters… We are inside a machine and we simply have no way of seeing the controls … the most powerful mind-control machine ever invented…

- Google, democracy and the truth about internet search

And over there in the radical left(?) corner:

The Five Star Movement controls … sprawling network of websites and social media accounts that are spreading fake news, conspiracy theories, and pro-Kremlin stories to millions… The leaders of the party are making money with a fake news aggregator.

- Italy’s Most Popular Political Party Is Leading Europe In Fake News And Kremlin Propaganda

So does that make Russia the referee? Uh-oh:

From a nondescript office building in St. Petersburg, Russia, an army of well-paid “trolls” has tried to wreak havoc all around the Internet — and in real-life American communities.

- The Agency (June 2015)

The net result is an American information environment where citizens and even subject-matter experts are hard-pressed to distinguish fact from fiction

- TROLLING FOR TRUMP: HOW RUSSIA IS TRYING TO DESTROY OUR DEMOCRACY (Nov 2016)

And for balance (remember that?):

In their evidence-lite handwringing over Russian mastery of Western electorates, Remainers and Hillary backers are rehabilitating the panic and conspiracy theorising of the McCarthyites of old.

- ‘Putinites on the web’ are the new ‘Reds under the bed’

that Russia took advantage of the social web’s desire to just share things without reading them… may be true, but so does every other media outlet

- No, Russian Agents Are Not Behind Every Piece of Fake News You See

Psychology

So what’s the source of this problem? Somewhere between your ears (and millennia ago in our evolutionary past)

Unless we understand the psychology of online news consumption, we won’t be able to find a cure …

- Why do we fall for fake news?

simply repeating false information makes it seem more true

- Unbelievable news? Read it again and you might think it’s true

As Facebook attempted to capture the fast-moving energy of the news cycle from Twitter… it built a petri dish for confirmation bias … Fake news and sensationalist news would be relatively ineffective without the existing worldview they confirm. But with … distrust of “the system” held by millions of Americans, Facebook provided the accelerant

- How The 2016 Election Blew Up In Facebook’s Face

polarization does not happen … because one side is thinking more analytically, while the other wallows in unreasoned ignorance … subjects who tested highest on measures like “cognitive reflection” and scientific literacy were also most likely to display … “ideologically motivated cognition.”

- How Your Brain Decides Without You

More: every single resource I’ve tagged psychology is fascinating.

(update: since finishing the first version of this post, I think the psychological aspects of fake news, and what they mean for the future of journalism, will be the focus of any eventual post).

Education

Clearly people need to be educated, but in what, and when?

82% of middle-schoolers couldn’t distinguish between an ad labeled “sponsored content” and a real news story…

- Most Students Don’t Know When News Is Fake, Stanford Study Finds

Aside: that one was also tagged native advertising, which reminds me to ask: when a corporation is paying for journalistic coverage, is the result journalism, advertising or fake news?

What we need today is metaliteracy – an ability to make sense of the vast amounts of information in the connected world of social media… educators and policymakers must “demonstrate the link between digital literacy and citizenship.”

- How can we learn to reject fake news in the digital world?

See also: In the war on fake news, school librarians have a huge role to play

So Who is doing What about it?

over 30 news organizations … and tech companies such as Facebook, Twitter, and YouTube to share best practices on how to verify true news stories and stop the spread of fake ones

- Facebook, Twitter, and 30 other orgs join First Draft’s partner network to help stop the spread of fake news

(So glad that’s working!)

Following the controversial firing of the editorial team who managed the Trending Topics… technology that will help prevent fake news stories from showing up in the Trending section

- Facebook to roll out tech for combating fake stories in its Trending topics

Facebook’s application for Patent … describes a sophisticated system for … improve the detection of pornography, hate speech, and bullying… much easier to identify than false news stories.

- Facebook is patenting a tool that could help automate removal of fake news

Free advice for Facebook: when you’re in a hole, stop digging. So firing your editors because they’re biased is only a good idea if you’re going to replace them with something better. And if someone does develop a fake news detector, don’t block it, even temporarily.

a red flag will appear if the site or news is deemed fake, yellow if the source is unreliable or green if it’s ok

- How Le Monde is taking on fake news

(OK as defined by…?)

If you can detect trolls, you can protect the people they’re trolling by muting or putting a warning over the trolls’ posts

- Machine learning can fix Twitter, Facebook, and maybe even America

See also: Hoaxy: A Platform for Tracking Online Misinformation

What about good, old-fashioned, high-quality journalism? Oh.

“…all the fact-checking of Trump’s lies, all the investigative journalism about his failures, even the tapes — none of it meant anything”… we ended up with a filter bubble election.

- The Dissolution of News: Selective Exposure, Filter Bubbles, and the Boundaries of Journalism

More: do I really have to send you to my collection of resources tagged filterbubble again? How about factchecking, then?

And let’s not forget the legislators:

Tech companies may face new legislation after struggling to comply with voluntary code of conduct…

- Facebook, Twitter, and Google are still failing to curb hate speech, EU says

Update: Facebook’s Dec 16 announcement

You don’t need me to enumerate the tactics Facebook is introducing - analyses are everywhere, or you could go straight to the announcement or Zuckerberg’s followup.

The most interesting analyses I saw included (my emphases):

people who want to coordinate to mess with the system will be able to do so fairly easily

- Clamping down on viral fake news, Facebook partners with sites like Snopes and adds new user reporting

If Facebook is going to pay for video, it might want to consider paying for truth, which is also good for business… Lies, propaganda, fake news, hate, and incivility won’t be “fixed” with any product or algorithm or staffing tweaks

- Facebook Steps Up

Relying on third-party fact checkers could lessen scrutiny on Facebook’s verification process and give the company another scapegoat… “labeling stories that have been flagged as false”… [will] fly in the face of the company’s business interests… explicitly ban fake news sites from placing ads … is a nice-sounding gesture that won’t actually do much… Any solution must center on the way News Feed ranks stories… “Fake news” isn’t a glitch in the system, but rather the Like economy working at peak efficiency.

- A Closer Look at Facebook’s Fake-News Fixes

And because there’s always more Firehose …

Here’s some more stuff tagged ‘fake’ on my ReadMe queue that I haven’t gotten to yet:

- All the BS that’s fit to click. (a Medium Collection)

- Propaganda warfare is increasingly shaping narratives, policies and lives around the world - Disinformation Crisis (a Coda deep dive)

So, having redigested all of this a second time around…

First reactions: 1. Is technology the answer?

I think I’m now more confused than I was before I started. What stays with me, however, is best summarised by Jeff Jarvis:

Do we really want to set up Facebook or Google as censors … to decide what is real and fake, true and false?

- Fake News: Be Careful What You Wish For

No, I really, really don’t.

I understand the desire to get technologists to “fix” this.

(update:

Asked who should tackle the problem, respondents gave about equal weight to government, tech companies such as Facebook and Google, and the public…

- Fake news is sickening. But don’t make the cure worse than the disease)

But despite all the effort going into natural language processing, semantic analysis and AI, there will be no simple technical fixes.

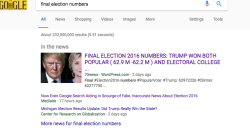

Why? Any technical fix will be vulnerable to the same problem all algorithms face: they can be gamed. Today’s Macedonian teenagers are simply gaming the Facebook algorithm to make cash. Previous generations (anyone here remember content farmers?) gamed Google. They still are.

So if we end up trusting algorithms to distinguish fake news from real, we are allowing the truth to be gamed.

Which is, of course, exactly what’s happening. Why should more technology make this any better?

We’re the problem. Whatever the algorithm, someone will game it for personal gain. And they won’t be exploiting technology - they’ll be exploiting human psychological weaknesses like the backfire effect, confirmation bias, homophily, narcissism and assimilation bias.

First reactions: 2. It’s in your head

Technology only ever augments our own human tendencies, for good or ill. Tech fails if it doesn’t somehow work the way our brains work.

Everyone was so naive about the Internet in the 1990s - we all thought it would bring the world together, as if it could somehow surgically remove from human nature our desire to flock together with the likeminded. Of course, the early internet geeks were likeminded.

Which makes this a wicked problem: not only is human nature hard to change, there are also billions of us, and there’s money being made.

So a better understanding of human psychology looks crucial. But where to apply that knowledge? Education from the youngest age is clearly critical, but it will take decades to create a media-literate and ‘metaliterate’ population via our schools. And by that time, other problems will certainly be upon us.

First reactions: 3. We can’t even define news, let alone fake news

The best way of increasing understanding of an issue amongst an adult population used to be via high-quality journalism, but we wouldn’t have this problem if the news industry had not been devastated.

As the first posts I curated above show, one person’s fake news is someone else’s authoritative journalism and a third person’s business model. Technology can’t tell the difference - but more people already trust algorithms with this than they trust editors and journalists.

It’s difficult to see this being reversed in an industry in freefall, increasingly reliant on native advertising.

Looking ahead

I was hoping, when I set out on this post, that by the time I’d gotten here - at the end of the post, after having ploughed back through all those resources and (trying to) organise my thoughts - an idea, a solution, something would have presented itself. Anything I could develop further in a ‘proper’ blog post, rather than these half-cooked Notes To Self.

That hasn’t happened. Sorry. At the back of my mind is a tickle tagged open web, but it’s not very strong, probably because the Open Web is in no better shape than professional journalism.

What I did find are indications that this is not going to get any easier:

If you think this election is insane, wait until 2020… technologies like AI, machine learning, sensors and networks will accelerate. Political campaigns get … so personalized that they are scary in their accuracy and timeliness.

- 5 Big Tech Trends That Will Make This Election Look Tame

More: resources tagged AI.

But I also see good things, and a lot more stuff to read. Maybe something will come.

(Update: perhaps due to Facebook’s announcement, perhaps simply because I’ve had a couple of days to mull things over since first publishing these notes, I think my next post will dive deeper into how psychological flaws have rendered journalism - particularly factchecking, data- and explanatory-journalism - useless.)

In the meantime, I’m grabbing a copy of the 2011 film Detachment for Christmas. Here’s why:

“How are you to imagine anything, if the images are always provided for you?… We must learn to read, to stimulate our own imaginations, to cultivate our own consciousness, our own belief systems. We all need these skills to defend, to preserve, our own minds”

hat-tip: @marcoRecorder via Energia viva.

Related Writing

i.e., BlogPlug alert:

- Storytelling and Branded Reality in the Internet of Experiences (and Trump’s Republican Party) (August 2016)

- How to lie terrifyingly well on social media (August 2016)

- All hail the Trump-o-Meter (September 2016): a modest proposal for real-time factchecking

Related reading

More Stuff I Think

More Stuff tagged algorithm, news, psychology, newsletter, cambridge analytica, facebook, identity, filter bubble, disinformation

See also: Content Strategy, Online Strategy, Social Media Strategy, Psychology, Social Web, Media, Politics