Ensuring Human Agency: A Design Pathway to Human-AI Interaction

my notes

I think I'll call these 'centaur papers': scientific papers describing how best to combine humans and AI.

Apparently theory and practice aren't matching up: "While frameworks on augmentation theorize how to best divide work between humans and AI, the empirical literature ... [shows] inconclusive findings. Interaction challenges... call into question how theorized augmentation benefits can be realized."

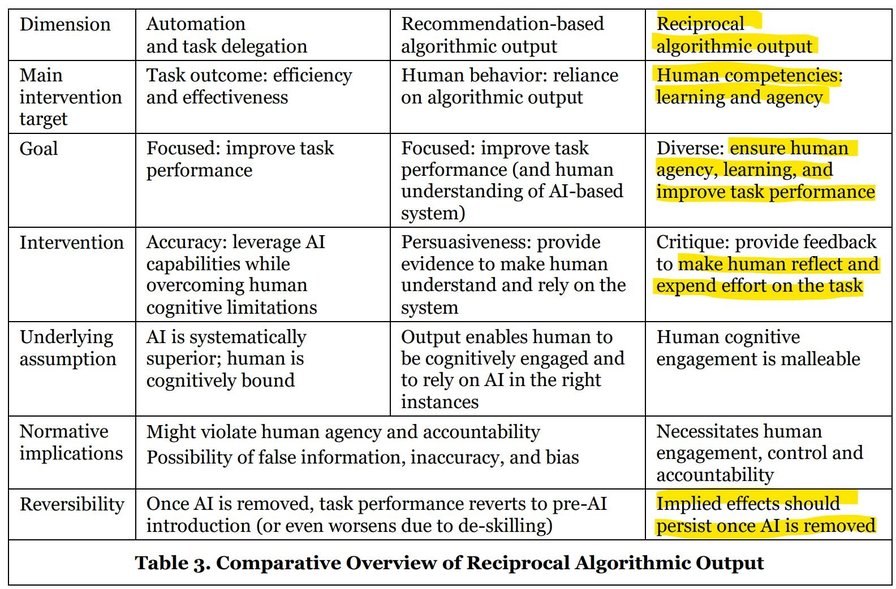

So the authors build on "cognitive learning theory" to "develop a conceptual model" for designing AI outputs that offer "reflection-provoking feedback... not prescribe any actions... [so requiring] humans to expend cognitive effort".

"Reciprocal algorithmic output provides open-ended, reflection-provoking feedback... integrates a user’s input [so] ... the user must expend effort to arrive at an answer." It does this by providing "evaluative feedback and critique... [rather than] explicit, outcome-focused recommendations, and focuses on improving a user’s understanding" of the area being explored, rather than focusing their education on how to use AI.

This enables "three crucial augmentation outcomes...: task performance, human agency, and human learning", rather than reducing people to accepting/rejecting AI output as their skills degrade over time.

Image via Ross Dawson on LinkedIn, whose analysis is also worth reading, identifying these "key concepts:

- Reciprocal Algorithmic Output... encouraging users to expend cognitive effort and maintain control over decisions, fostering human agency and learning.

- Augmentation Outcomes Beyond Productivity: The traditional productivity-focused view of augmentation is deficient; AI systems should be designed to unlock the broader potential of human-AI collaboration beyond mere efficiency gains.

- Building Human Competencies: The paper introduces "reversibility," suggesting that effective human-AI interactions should empower users to perform tasks effectively even without AI, building lasting human competencies rather than fostering over-reliance."

Read the Full Post

The above notes were curated from the full post aisel.aisnet.org/cgi/viewcontent.cgi?article=1156&context=icis2024.
